Code to Racist Technologies

An Interactive Essay about Bias Technologies

Overview

Code to Racist Technologies is a subversive argument against technologies developed without thoughtful consideration of their implications. It is also an exploration of how to communicate information and narratives through interactive media. The project is a work in progress that aims to start conversations about racism through colorism in technology.

This project is inspired by Ekene Ijeoma’s The Ethnic Filter, Joy Buolamwini’s research project Gender Shades, and Dr. Safiya Umoja Noble’s talk Algorithms of Oppression: How Search Engines Reinforce Racism at Data & Society.

Roles

Concept, Interaction & Visual Design

Tools

JavaScript, HTML/CSS

Methodology

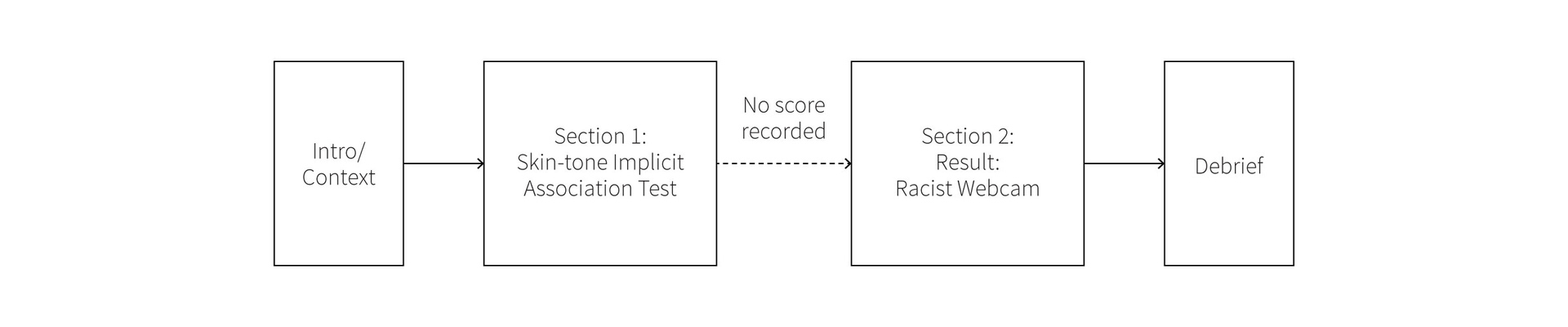

Read as an interactive essay, the project experience is twofold. First, the participant completes an implicit bias test derived from a similar test developed by researchers at Harvard, UVA, and the University of Washington. Next, based on their test score, the participant sees a visualization of a facial recognition technology they developed and how it affects people with different skin tones.

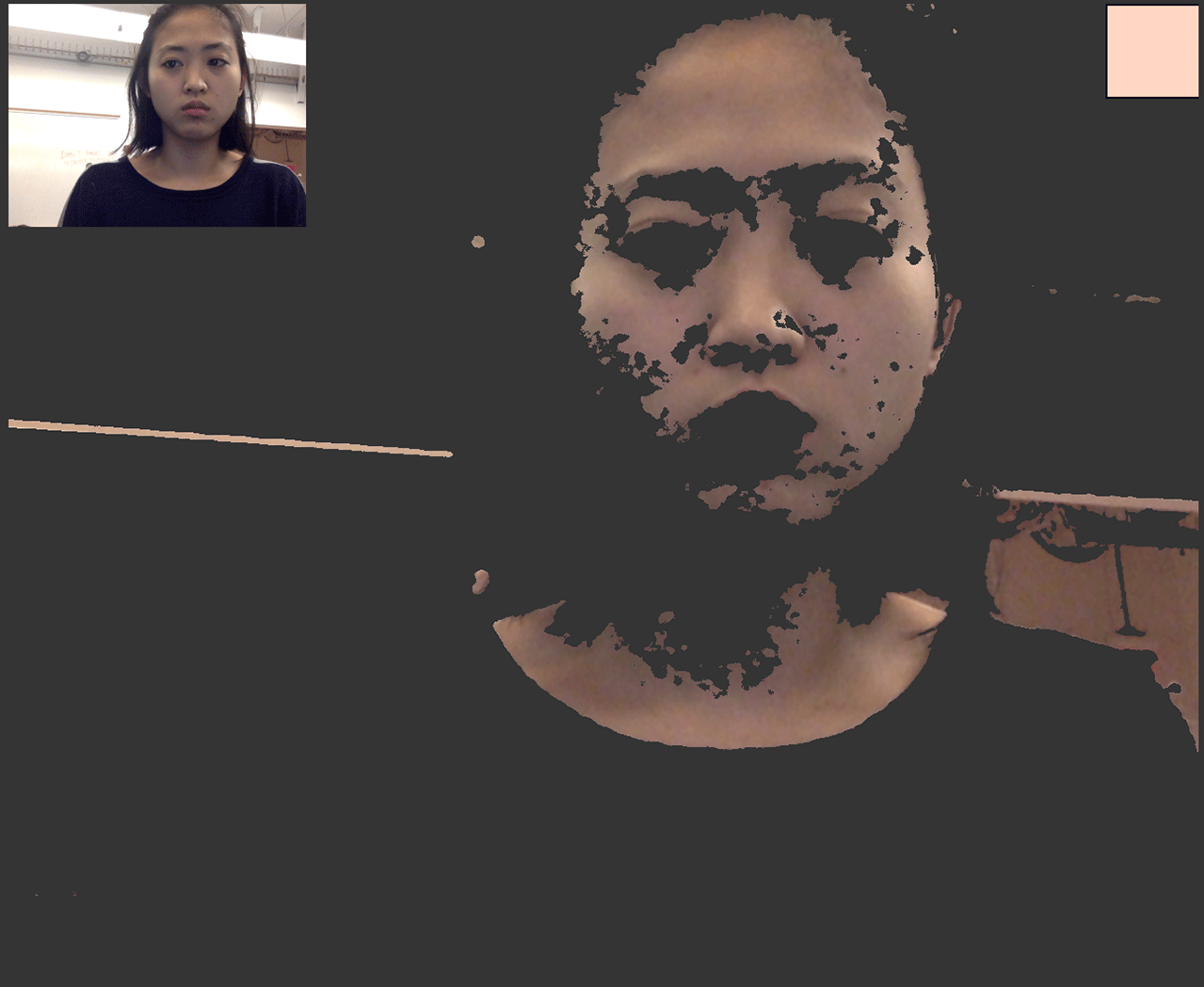

In reality, the program does not record the user’s test result. The result is always shown as a preference for light skin relative to dark skin, with a random percentage between 80% and 90% representing the likelihood that they will develop a technology biased against those with darker skin. I designed a “racist webcam” to visualize the technology the user develops. The webcam program isolates the user’s skin from the background, determines where their skin tone falls on the Fitzpatrick Skin Type Classification, then maps that value to the degree of pixelation in their webcam capture. The darker your skin tone is, the more pixelated your video appears, and the less visible you are. My hope was that juxtaposing the user’s video result with other participants’ experiences of the program would heighten the visual metaphor of the effects of racial bias on one’s visibility and voice.
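The two mechanics described above, a score that ignores the real test result and pixelation that grows as skin tone darkens, can be sketched roughly as follows. This is a minimal illustration and not the project's actual source: the function names, the 4 to 40 pixel block range, and the use of a grayscale frame are all assumptions, and the Fitzpatrick classification step is left out because the essay does not say how it is computed.

```javascript
// Hypothetical helpers; the essay does not publish its source code.

// Return a whole-number percentage in [min, max], regardless of how the
// participant actually answered the implicit bias test.
function fakeBiasPercentage(min = 80, max = 90, rng = Math.random) {
  return Math.floor(rng() * (max - min + 1)) + min;
}

// Map a brightness value (0 = darkest, 1 = lightest) to a block size in
// pixels. Darker input gives larger blocks, i.e. heavier pixelation.
// The 4..40 range is an assumed, illustrative choice.
function blockSizeForBrightness(brightness, minBlock = 4, maxBlock = 40) {
  const b = Math.min(1, Math.max(0, brightness));
  return Math.round(maxBlock - b * (maxBlock - minBlock));
}

// Pixelate a grayscale frame (flat, row-major array) by replacing every
// block-by-block tile with the average of the values inside it.
function pixelate(gray, width, height, block) {
  const out = new Array(gray.length);
  for (let by = 0; by < height; by += block) {
    for (let bx = 0; bx < width; bx += block) {
      const yEnd = Math.min(by + block, height);
      const xEnd = Math.min(bx + block, width);
      let sum = 0;
      let count = 0;
      for (let y = by; y < yEnd; y++) {
        for (let x = bx; x < xEnd; x++) {
          sum += gray[y * width + x];
          count++;
        }
      }
      const avg = sum / count;
      for (let y = by; y < yEnd; y++) {
        for (let x = bx; x < xEnd; x++) {
          out[y * width + x] = avg;
        }
      }
    }
  }
  return out;
}
```

In a browser, the frame would presumably come from a webcam capture drawn to a canvas; the sketch works on plain arrays so the mapping itself is easy to inspect.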

More on the "Racist Webcam"

After consulting current academic research, I chose a combination of the RGB and HSV color models to separate skin from the background. [1] I adjusted the values in the algorithm I was referencing after user testing revealed that it did not detect dark skin tones. I use brightness (the V, or value, channel in HSV) as the variable that changes the degree of pixelation.

[1] S. Kolkur, D. Kalbande, P. Shimpi, C. Bapat, and J. Jatakia (2017). Human Skin Detection Using RGB, HSV and YCbCr Color Models. Retrieved from https://arxiv.org/pdf/1708.02694.pdf
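As a rough sketch of what a combined RGB and HSV skin test can look like, the example below converts each pixel to HSV, applies a threshold rule in both color spaces, and averages the V channel over the pixels that pass. The threshold values are placeholders chosen for illustration; they are neither the values published in [1] nor the adjusted values used in this project, which the text does not list. All function names are hypothetical.

```javascript
// Convert 8-bit RGB to HSV: h in degrees [0, 360), s and v in [0, 1].
function rgbToHsv(r, g, b) {
  const rn = r / 255;
  const gn = g / 255;
  const bn = b / 255;
  const max = Math.max(rn, gn, bn);
  const min = Math.min(rn, gn, bn);
  const delta = max - min;
  let h = 0;
  if (delta !== 0) {
    if (max === rn) h = 60 * (((gn - bn) / delta) % 6);
    else if (max === gn) h = 60 * ((bn - rn) / delta + 2);
    else h = 60 * ((rn - gn) / delta + 4);
  }
  if (h < 0) h += 360;
  const s = max === 0 ? 0 : delta / max;
  return { h, s, v: max };
}

// Placeholder thresholds (assumptions, for illustration only).
const ILLUSTRATIVE_THRESHOLDS = {
  hueMax: 50,   // degrees
  satMin: 0.2,
  satMax: 0.7,
  minR: 60,     // minimum red channel value
  minRG: 10,    // minimum gap between red and green
};

// A pixel counts as skin only if it passes both the HSV and the RGB rule.
function isSkin(r, g, b, t = ILLUSTRATIVE_THRESHOLDS) {
  const { h, s } = rgbToHsv(r, g, b);
  const hsvOk = h <= t.hueMax && s >= t.satMin && s <= t.satMax;
  const rgbOk = r > t.minR && r > g && r > b && r - g > t.minRG;
  return hsvOk && rgbOk;
}

// Average V (brightness) over skin pixels in an RGBA byte array, the layout
// used by canvas ImageData. Returns null when no pixel is classified as skin.
function meanSkinBrightness(rgba, t = ILLUSTRATIVE_THRESHOLDS) {
  let sum = 0;
  let count = 0;
  for (let i = 0; i + 3 < rgba.length; i += 4) {
    if (isSkin(rgba[i], rgba[i + 1], rgba[i + 2], t)) {
      sum += rgbToHsv(rgba[i], rgba[i + 1], rgba[i + 2]).v;
      count++;
    }
  }
  return count === 0 ? null : sum / count;
}
```

Fixed thresholds like these are exactly where a detector can silently exclude darker skin: a lower bound on the red channel that is set too high rejects dark tones outright, which is the kind of failure the user testing uncovered.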

Approach

I approach the project from a particular perspective: that each and every one of us is biased, and without conscious and continuous awareness of our bias, we will continue to develop biased technologies and products, no matter how well-intentioned we are. To me, the code to racist technologies is us, especially those of us who assume that because we are “liberal” and “progressive”, we are not and will not be a part of the problem. I also created the project with a specific audience in mind: technologists, both beginners and enthusiasts.

Reflection

I started this project to find answers but ended up with more questions: How should I speak about race to a reluctant audience? How does my position as an Asian woman influence my thought process in defining race? Do I even have grounds to speak about this issue when I am not necessarily a minority in this industry?

Presenting the piece in class and in the show also showed me its limitations. I had drastically different experiences presenting my project to different people: some were offended, some thought the piece was relevant, and some refused to engage. In a way, it seems that the piece is preaching to the choir. I am uncertain whether this is the right format, conceptually, for addressing issues with facial recognition and machine learning. (Should it have been a toolkit like Adam Harvey's AntiFace?)

Questions/Problems to consider:

- Confusing interaction with the webcam part: users are confused about what determines the pixelation; some thought it was determined by their skin tone

- Randomly generated number: Too arbitrary?

- Wording: Racist technologies vs Bias technologies?

Exhibition Setup

Process

I referenced a number of academic papers on skin detection. Different researchers have used different color spaces, with HSV and YCbCr being the more popular ones. The paper that I first based my algorithm on uses a combination of RGB and HSV.

The code separates skin from the background. Upon further testing, however, I realized the algorithm did not recognize individuals with darker skin tones. Through this process of trial and error, testing different lighting conditions and different subjects, I began to gain a superficial understanding of how somewhat questionable decisions can make their way into the design process.

I spoke to Dan O'Sullivan, who had worked with similar algorithms before Kinect was invented. Dan used normalized RGB, which in his case worked well for identifying light and dark skin tones but not olive skin tones. In the end, I did not implement normalized RGB in my program because I needed a single variable to determine the degree of pixelation. Later, I spoke to MH about color spaces, and he recommended that I switch to HCL, which I hope to test in my next iteration.
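For readers unfamiliar with normalized RGB, the sketch below shows the standard transformation: each channel is divided by the sum of the three. It is not Dan's implementation, which is not described here, and the function name is hypothetical. One plausible reading of the "single variable" problem is visible in the output: normalization discards overall intensity, so a brightly lit and a dimly lit pixel of the same color map to the same values, leaving two chromaticity coordinates and no single brightness-like number to drive the pixelation.

```javascript
// Normalized RGB: each channel divided by the channel sum, so r + g + b = 1.
// Pure black has no defined chromaticity; returning equal thirds is a
// convention assumed here for illustration.
function normalizedRgb(r, g, b) {
  const sum = r + g + b;
  if (sum === 0) return { r: 1 / 3, g: 1 / 3, b: 1 / 3 };
  return { r: r / sum, g: g / sum, b: b / sum };
}

// The same color at full and half intensity.
const bright = normalizedRgb(200, 100, 50);
const dim = normalizedRgb(100, 50, 25);

// Both calls yield the same chromaticity, so the brightness difference
// between the two pixels is no longer recoverable.
console.log(bright, dim);
```

Because the three normalized values always sum to one, only two of them are independent, which is why this representation is often used as a two-dimensional chromaticity space.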

Credits

This project would not have been possible without the generous help of Mithru Vigneshwara.